Introduction

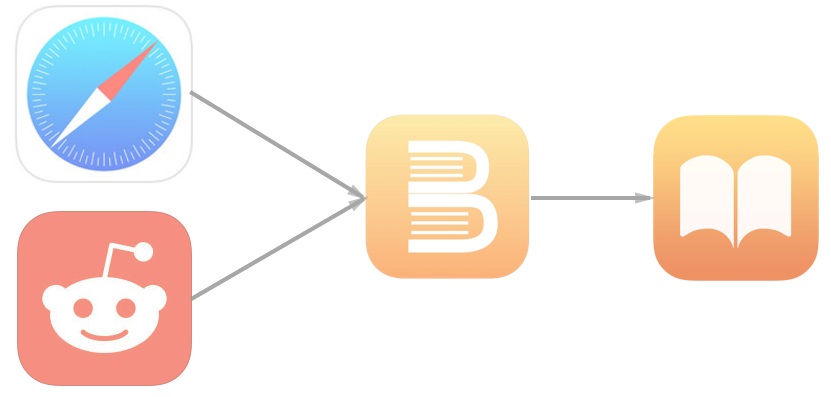

Bind It is an iPhone and iPad app that converts webpages into eBooks that can be stored and read within Apple’s iBooks app. It is coming soon to the Mac and maybe someday to Android as well. Right now, you can find it in the Apple App Store for $0.99.

Making a successful app takes a lot more than just writing good code. Here I write about what I did outside of Xcode to make Bind It.

Product Development

I frequently come across long-form articles that I want to read but either don’t have time for or want to save for later. It was common for me to spot an article at work and want to wait until I was off the clock to read it. Other times I had a long wait while getting my oil changed or at the doctor’s office and wanted to catch up on those saved articles.

Bookmarks and open tabs didn’t work well. Eventually, I either ended up with a mess of temporary bookmarks cluttering my bookmarking system, or I lost a tab I’d kept open for weeks.

My brother lives in a cellular dead zone, so when I watched his kids for him I had an opportunity to catch up on some reading but no internet. Besides, articles disappear. They get deleted or moved behind a paywall. I didn’t know how soon I would get to an article and didn’t want to lose it if I took too long. I needed a solution that didn’t require a constant internet connection, kept my articles organized, and kept them permanently.

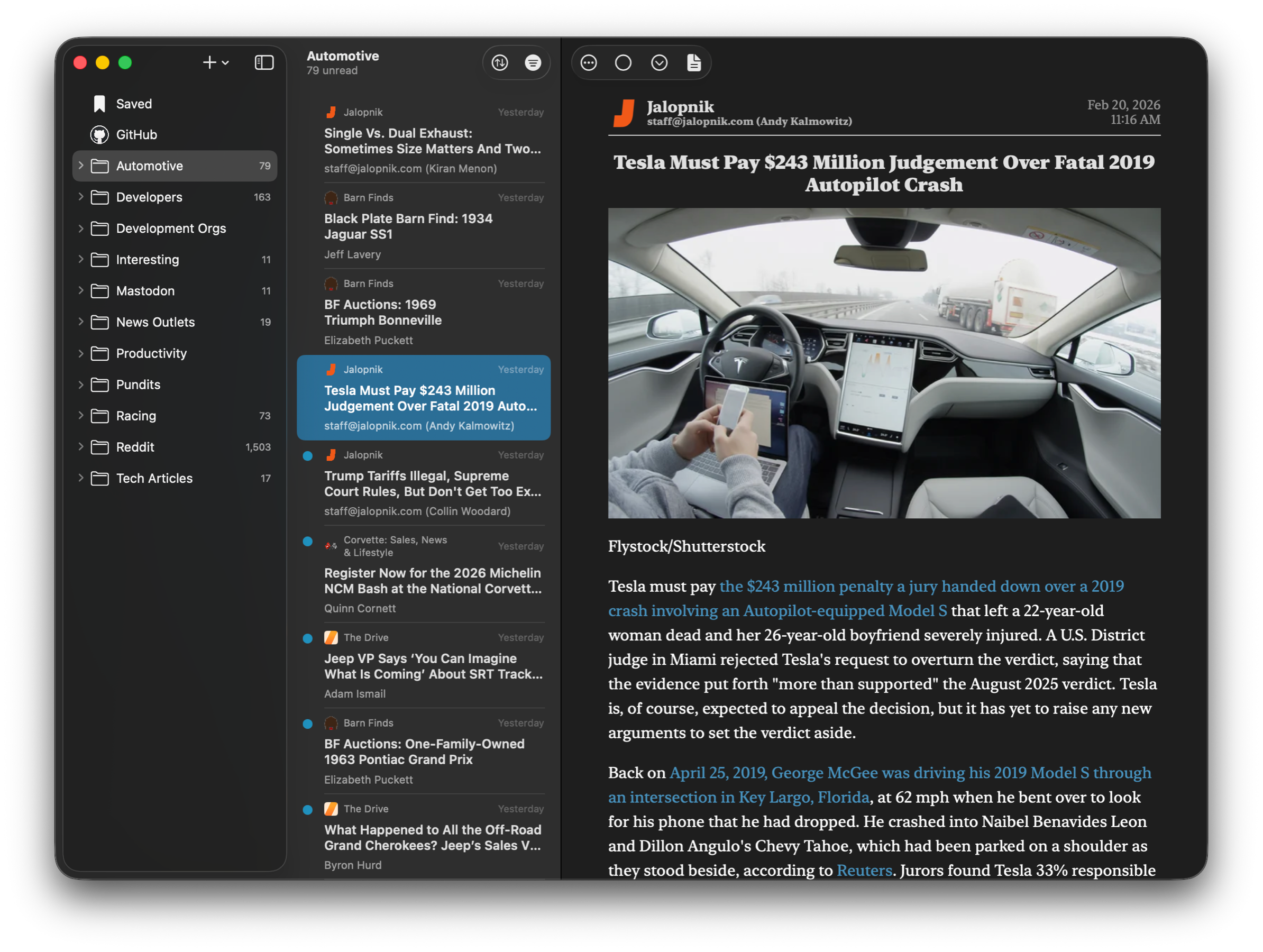

My wife had different needs, and I wrote Bind It for those too. She likes to read amateur horror stories from a subreddit called Nosleep. Some of them are long, and she would often lose track of ones she wanted to finish. She also uses the Reddit iPhone app to read them, and the text in it is small and hard on the eyes.

eBooks are packages of text and images that are portable. eBook readers, like iBooks, allow you to store these eBooks, manage them, and view them. The reader will scale the text for you and even allow for different viewing themes that work better for day vs. night. eBook readers work to reduce eye strain.

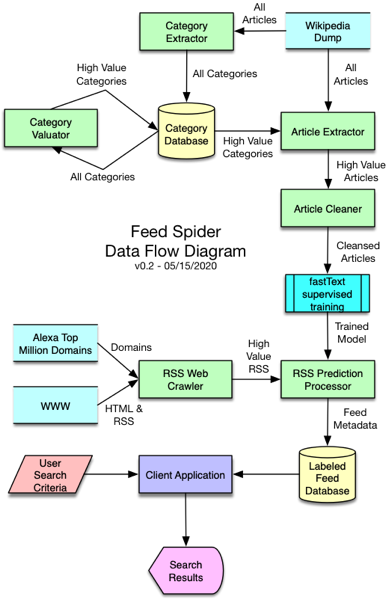

What we needed was an app that created an eBook on the fly from a web page. It needed to figure out which images and text were important. It needed to search for and throw away ads and user comments at the bottom of articles.
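The filtering idea above can be illustrated with a rough sketch. This is not how Bind It is implemented; it is a minimal Python example using only the standard library, and the tag list, the class and id tokens treated as ads or comments, and the `ArticleExtractor` name are all assumptions made for illustration. It also assumes reasonably well-formed markup.

```python
from html.parser import HTMLParser

# Hypothetical heuristics: tags and class/id tokens treated as non-article content.
SKIP_TAGS = {"script", "style", "nav", "aside", "footer", "form"}
SKIP_TOKENS = {"ad", "ads", "advert", "sponsored", "comment", "comments"}
# Void elements never get a closing tag, so they must not affect nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img",
             "input", "link", "meta", "source", "track", "wbr"}


class ArticleExtractor(HTMLParser):
    """Collects paragraph text and image URLs, skipping likely ads and comments."""

    def __init__(self):
        super().__init__()
        self.skip_depth = 0    # > 0 while inside an unwanted element
        self.paragraphs = []   # cleaned text of each kept <p>
        self.images = []       # src of each kept <img>
        self._buffer = None    # text pieces of the <p> currently being read

    def _is_unwanted(self, tag, attrs):
        attr_map = dict(attrs)
        class_tokens = set((attr_map.get("class") or "").lower().split())
        element_id = (attr_map.get("id") or "").lower()
        return (tag in SKIP_TAGS
                or bool(class_tokens & SKIP_TOKENS)
                or element_id in SKIP_TOKENS)

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            if (tag == "img" and self.skip_depth == 0
                    and not self._is_unwanted(tag, attrs)):
                src = dict(attrs).get("src")
                if src:
                    self.images.append(src)
            return
        if self.skip_depth or self._is_unwanted(tag, attrs):
            self.skip_depth += 1   # track nesting so we know when the block ends
        elif tag == "p":
            self._buffer = []

    def handle_endtag(self, tag):
        if tag in VOID_TAGS:
            return
        if self.skip_depth:
            self.skip_depth -= 1
        elif tag == "p" and self._buffer is not None:
            text = " ".join("".join(self._buffer).split())  # collapse whitespace
            if text:
                self.paragraphs.append(text)
            self._buffer = None

    def handle_data(self, data):
        if self.skip_depth == 0 and self._buffer is not None:
            self._buffer.append(data)


extractor = ArticleExtractor()
extractor.feed('<article><p>Story text.</p><div class="ad"><p>Buy now</p></div></article>')
print(extractor.paragraphs)
```

The kept paragraphs and image URLs would then be the raw material for packaging an eBook. Real pages are messier than this, so a production version would need more robust heuristics than a fixed token list.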

Branding

I had originally named the app Book It, which I think is a little catchier. It was so catchy that an online booking company had already caught it. Book binding in the digital world is really what Bind It is doing, so I changed the name to Bind It. As a bonus, the logo I had created for it still worked. I’m mostly happy with the name and logo.

My software company’s name also needed to be updated. Vineyard Enterprise Software, Inc. worked well enough as the name of the staff augmentation company I started in the ’90s, but it doesn’t hold up now that I focus on web and mobile development. Vincode is shorter, easier to remember, and fits the modern web and mobile world better, so I trademarked it. Apple doesn’t allow you to use trade names, so until I formally change the name of the company, it will show up as the longer, older name.

Advertising

A decent idea, app, logo, and name aren’t enough to get any traction in the App Store. You must buy advertising. At $0.99 per sale, of which Apple passes $0.70 to me, spending a lot on advertising isn’t viable.

My current plan is to run a trial advertising campaign on the amateur story subreddits, like Nosleep, to target their readers specifically. The readers of these subreddits are one of the two main use cases for Bind It, so hopefully conversions will be decent. At $1 per 1000 impressions, it should be affordable too. Based on the results of the trial campaign on Reddit, I might roll the campaign out to other short story sites on the web. If anyone has a site that they think would work well with Bind It, let me know. I’ll make sure Bind It works for it and may help support it with advertising.
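To put those numbers in perspective, here is a small back-of-the-envelope calculation in Python. It uses only the two figures mentioned above ($0.70 net per sale and $1 per 1000 impressions); the function names are made up for this example, and the model assumes every sale comes from an ad impression and ignores any other costs.

```python
def break_even_conversion_rate(net_per_sale: float, cost_per_1000: float) -> float:
    """Fraction of impressions that must become sales to cover the ad spend."""
    cost_per_impression = cost_per_1000 / 1000
    return cost_per_impression / net_per_sale


def campaign_profit(impressions: int, conversion_rate: float,
                    net_per_sale: float, cost_per_1000: float) -> float:
    """Net revenue from sales minus what the impressions cost."""
    sales = impressions * conversion_rate
    ad_spend = impressions / 1000 * cost_per_1000
    return sales * net_per_sale - ad_spend


rate = break_even_conversion_rate(net_per_sale=0.70, cost_per_1000=1.00)
print(f"Break-even conversion rate: {rate:.4%}")
print(f"Impressions needed per sale to break even: {1 / rate:.0f}")
```

Under these assumptions the campaign breaks even at about one sale for every 700 impressions, or roughly 1.4 sales per 1000 impressions. Anything below that loses money on each ad shown.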

Web Presence

You must have a website these days, and it needs to look professionally designed. I settled on WordPress as the content management system for my sites. It is extremely popular, with over 25% of websites running it. I chose it solely for its popularity, but I was pleasantly surprised when I dug under the hood and started working with it. There are a lot of very professional-looking themes to get started with. Adding dynamic functionality to a professional site is trivial if you have been building dynamic sites for a while.

I registered vincode.io because vincode.com was already taken and the “io” top-level domain is common for tech companies these days.

Social

I created a Facebook page for Bind It. I don’t expect much to be going on over there, but it was easy to create and it lets people in my life have a glimpse into what I do for a living.

This article will also appear on LinkedIn. I’m hopeful that posting on LinkedIn will bring in a handful of early adopters during the initial soft rollout. Finding more bugs before I start advertising could head off some negative reviews when I ramp up marketing. I’ve already put out two revisions of the app based on early testing.

If any significant social activity springs up around Bind It, it will most likely be on Reddit since I plan on advertising there. I created a Bind It subreddit where I can get feedback from people. Hopefully participation will be higher there since the people I advertise to will likely already have a Reddit account to post under.

The social game for Bind It is a little weak. This is an area that I plan to continue to work on.

Conclusion

I wrote Bind It because it filled a need that I personally had. I want other people to use it as well, so I put a lot of effort into the business side of app development. Give it a try.

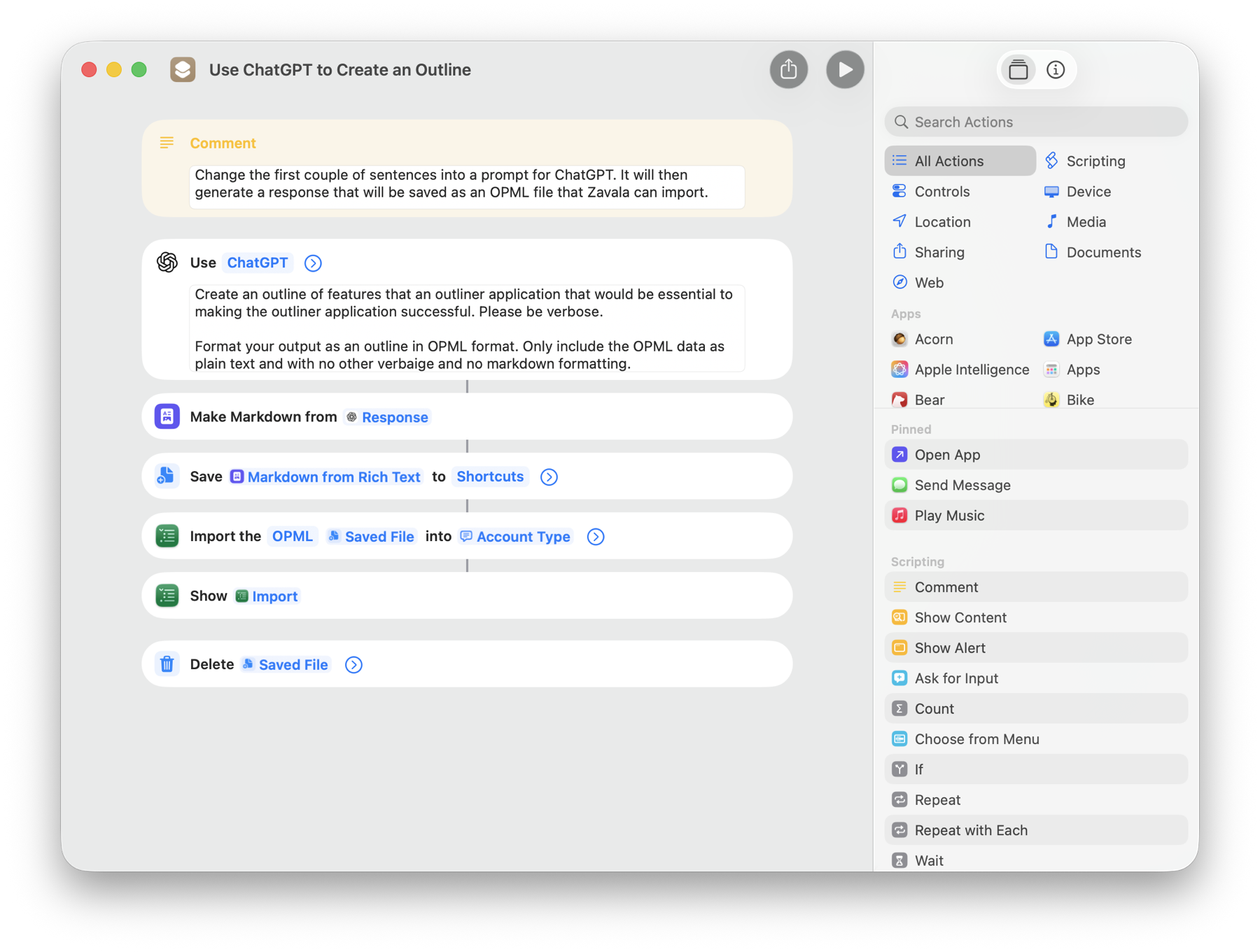

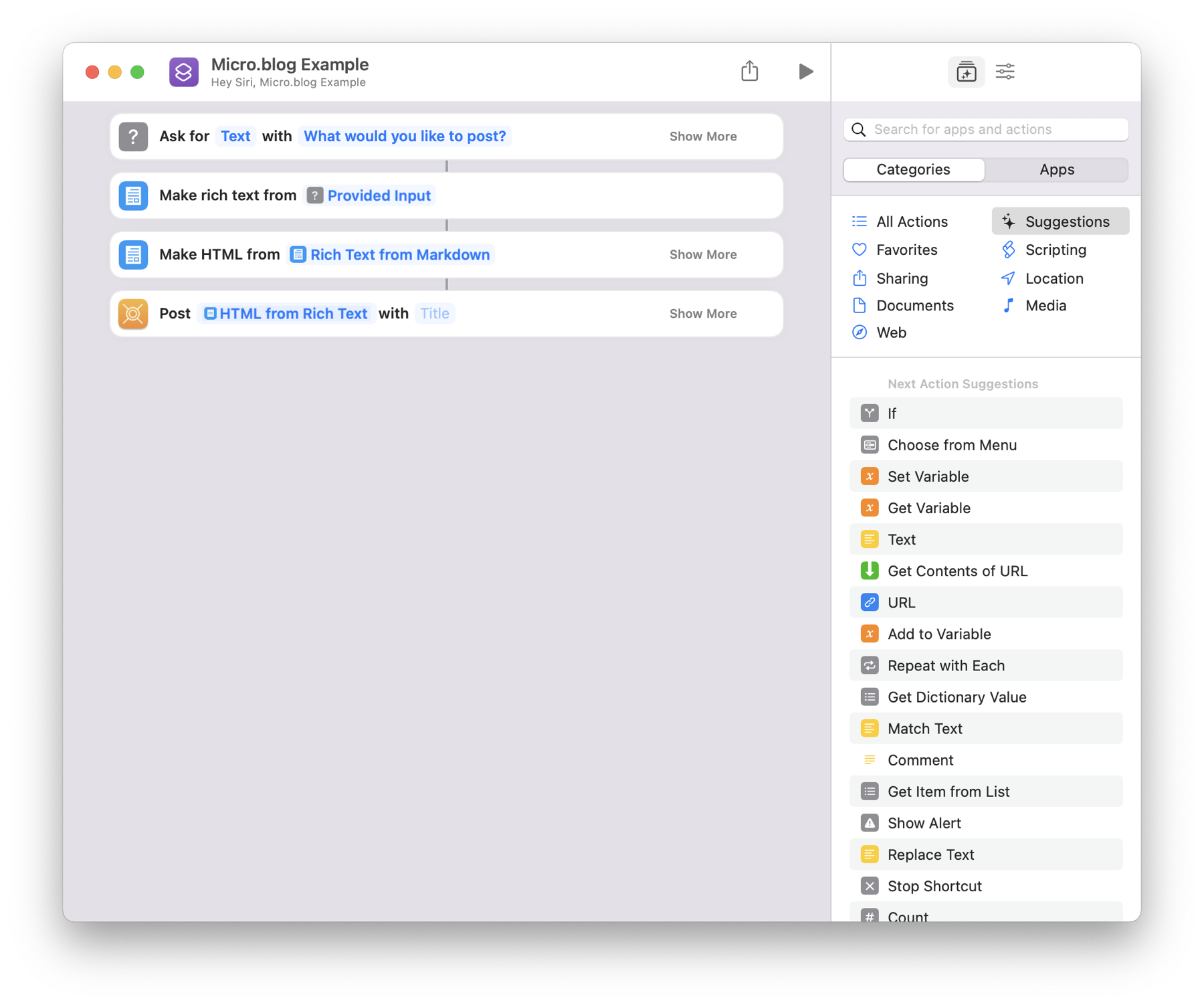

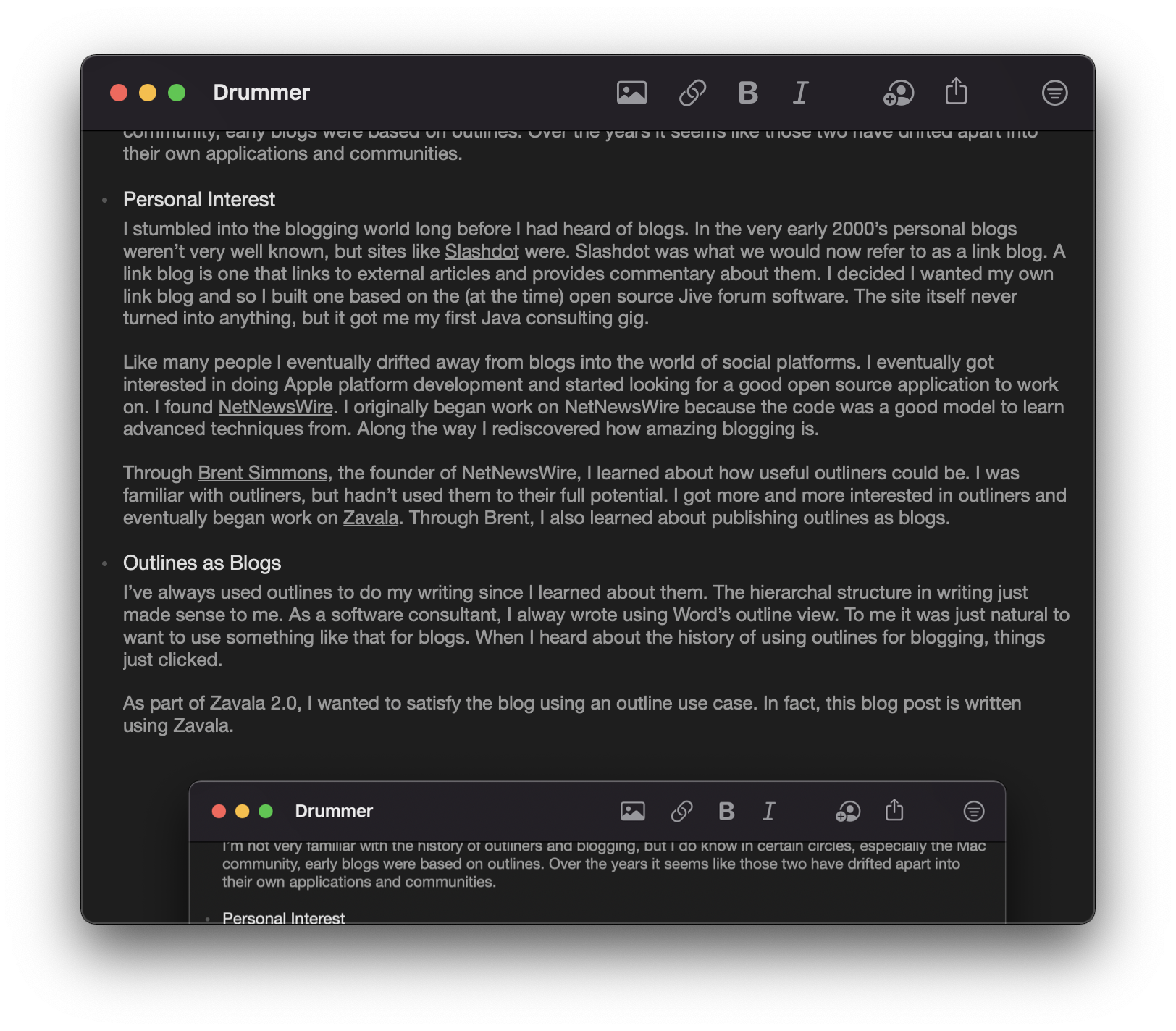

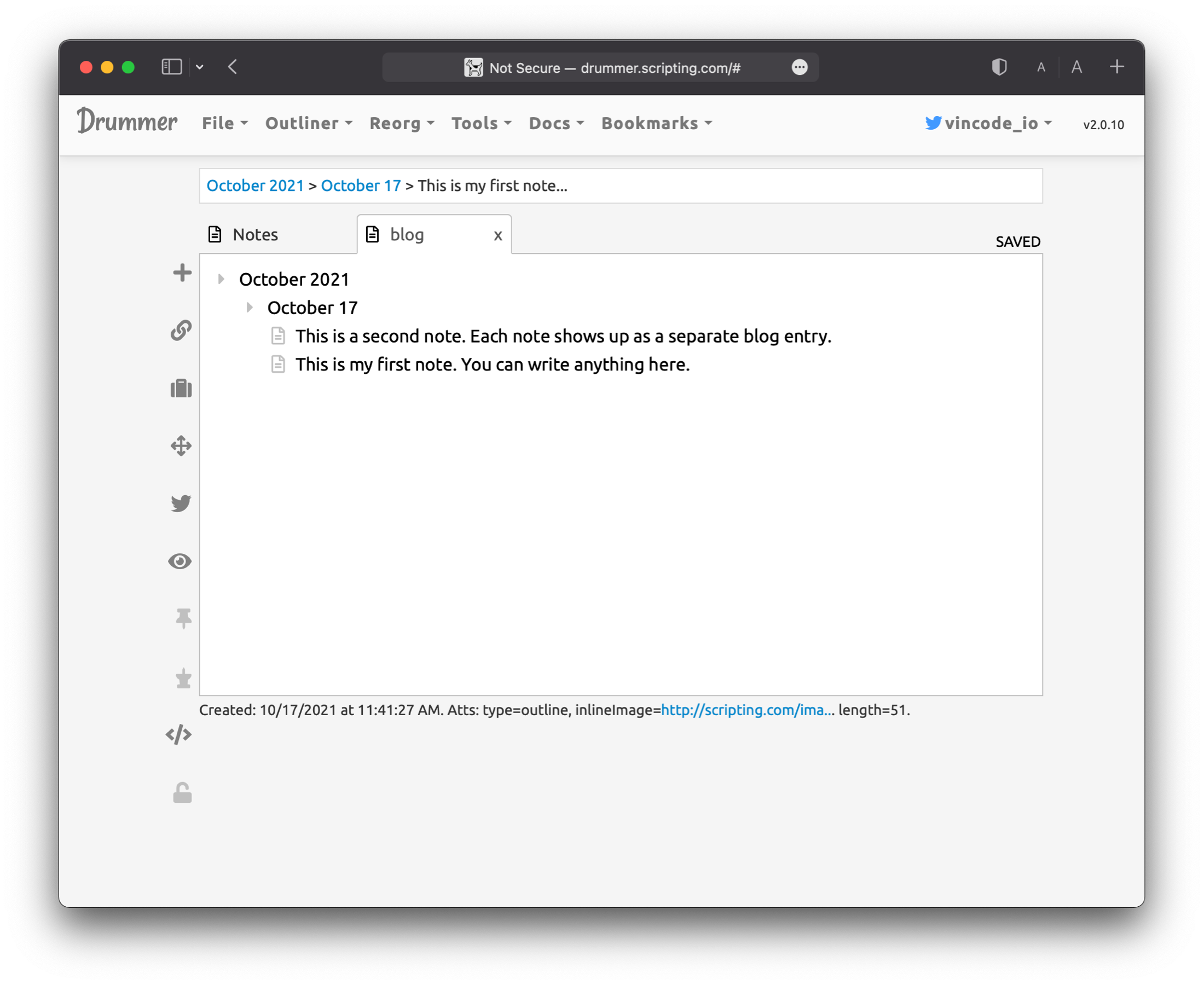

Just modify the first sentence in the ChatGPT box to a prompt you come up with and run it! You’ll get a ChatGPT generated outline in Zavala, ready to edit.

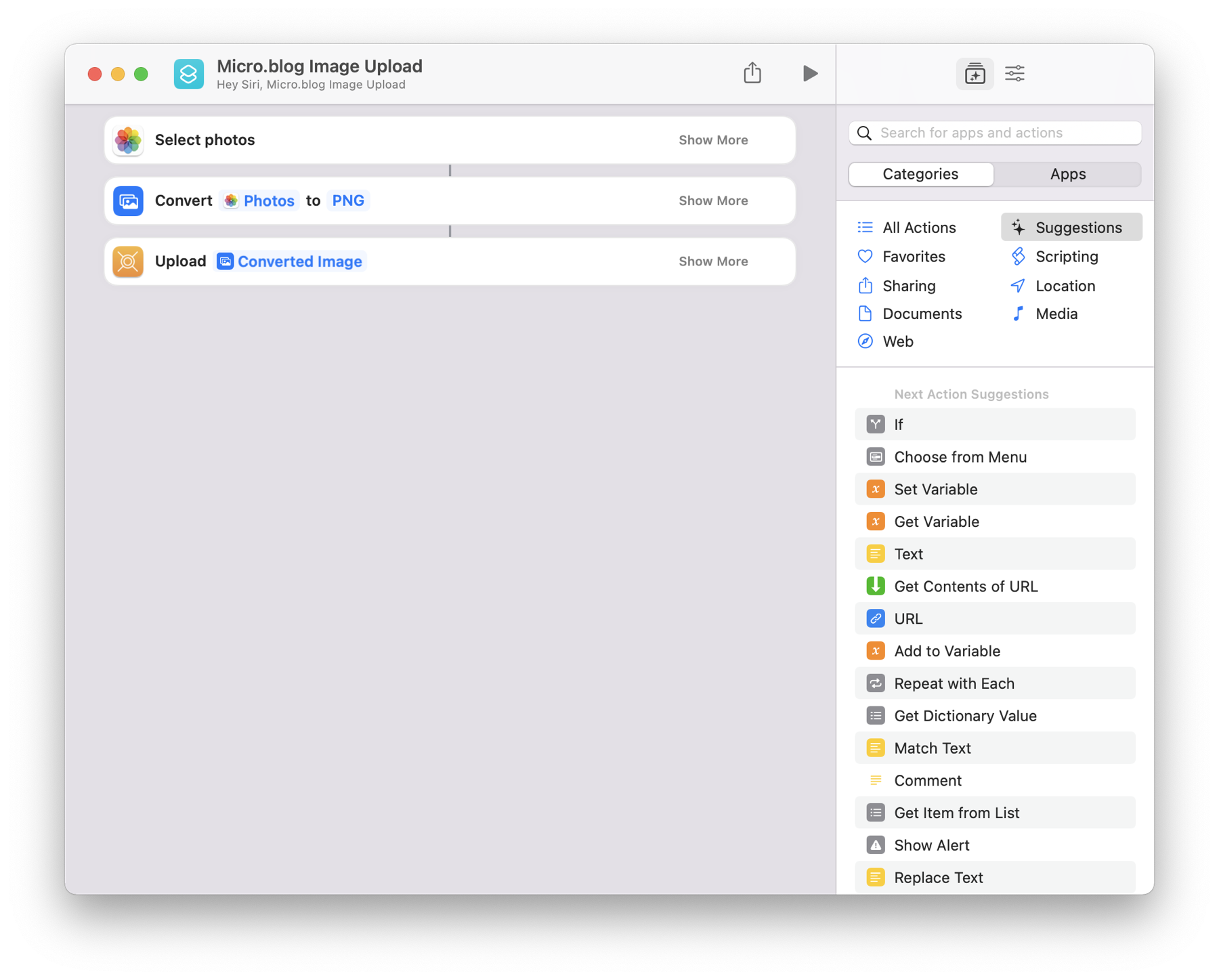

To publish this outline, I run a Shortcut that uploads images and HTML to my

It is important here to point out some terminology differences. A “note” in Zavala is associated with a row. It provides additional detail for the topic. When publishing docs or blog posts from Zavala a topic is a group heading and a note is paragraph text.

We settled in and hung out there for a couple of days, only going into Cottonwood to sign up again for Planet Fitness, buy food, and use the internet at the closest Starbucks. It was surprisingly rainy for being in the desert, and mud got everywhere. Eventually we learned to live with it.
