Feed Spider - Part 2

In the first post about Feed Spider we discussed the motivation behind creating a feed directory. We also discussed some software components that can be used to create Feed Spider. Now we’re going to try to tie that all together.

The architecture and design of Feed Spider are at the inception phase. Writing this blog post is as much an exercise in helping me understand the problem space as it is a way to communicate what I am trying to do. As they say, no plan survives first contact with the enemy. This plan is no different, and I expect to iterate on it and refine it as I learn and implement more.

Feedback and criticism are welcome. The sooner a naive approach is caught, the easier it is to change.

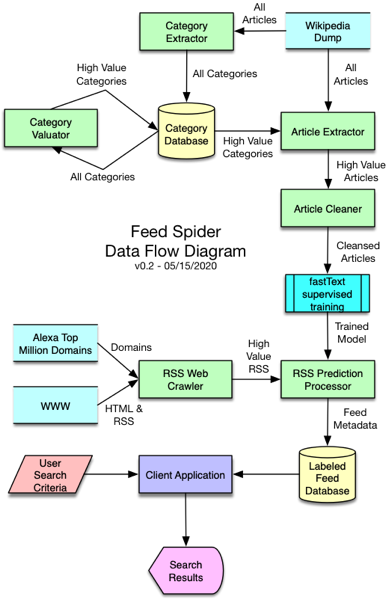

Flow Chart

Pictures always help and sometimes the old ways are best.

Categories

The first problem that we run into when categorizing RSS feeds is deciding which categories to put them into. Wikipedia supplies us with the categories associated with each article. The problem is that these categories are hierarchical: categories can themselves have categories. An article can also be assigned multiple categories. There are probably thousands of categories in Wikipedia. We will need to roll up the category hierarchy and reduce the number of categories used.

To do so, we will extract the categories used in Wikipedia and load them into a relational database using a new process called Category Extractor. We can then do some data analysis using SQL to get a rough idea of what the top-level categories are and how many articles are under them. Once we understand the data better, we should be able to come up with criteria for tagging high-value categories. A process called Category Valuator will be run against the database to identify and tag the high-value categories we want to extract articles for.
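As a rough sketch of the kind of SQL analysis this could involve, the example below rolls article counts up the category hierarchy with a recursive query in SQLite. The schema (`category_parent`, `article_category`), the function names, and the sample rows are all made up for illustration; the real Category Extractor output may look quite different.

```python
import sqlite3


def build_demo_db():
    """Create a tiny in-memory database using a hypothetical two-table schema."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE category_parent (child TEXT, parent TEXT);
        CREATE TABLE article_category (article TEXT, category TEXT);
    """)
    conn.executemany("INSERT INTO category_parent VALUES (?, ?)", [
        ("Jazz", "Music"), ("Blues", "Music"),
        ("Music", "Arts"), ("Painting", "Arts"),
    ])
    conn.executemany("INSERT INTO article_category VALUES (?, ?)", [
        ("Miles Davis", "Jazz"), ("B.B. King", "Blues"),
        ("B.B. King", "Jazz"), ("Cubism", "Painting"),
    ])
    return conn


def rollup_counts(conn):
    """Return {category: number of distinct articles in it or in any descendant}."""
    sql = """
    WITH RECURSIVE ancestry(category, ancestor) AS (
        -- every category that has articles is its own ancestor
        SELECT category, category FROM article_category
        UNION  -- UNION (not UNION ALL) drops duplicates, so cycles terminate
        SELECT a.category, p.parent
        FROM ancestry AS a
        JOIN category_parent AS p ON p.child = a.ancestor
    )
    SELECT a.ancestor, COUNT(DISTINCT ac.article)
    FROM ancestry AS a
    JOIN article_category AS ac ON ac.category = a.category
    GROUP BY a.ancestor
    ORDER BY 2 DESC, 1
    """
    return dict(conn.execute(sql).fetchall())


if __name__ == "__main__":
    for category, count in rollup_counts(build_demo_db()).items():
        print(category, count)
```

Counting distinct articles matters here because one article can sit in several sibling categories, and we do not want it counted twice in the parent.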

Training Data

The Article Extractor process will scan Wikipedia for articles that have the categories that we are looking for. Initially we will pull 100 articles per category for the training file and increase that number as needed. An additional 10% of the records will be pulled into a separate file that will be used to test the model. The output records will be formatted for use by fastText. Each record will have one or more categories (or labels) associated with the article text.
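To make the record format concrete, here is a minimal sketch of how a record could be written. fastText's supervised mode reads one example per line, with each label marked by the default `__label__` prefix. The helper names, the choice to replace spaces in labels with underscores, and the shuffling seed are assumptions for illustration only.

```python
import random


def to_fasttext_line(labels, text):
    """Format one article as a single fastText training line."""
    # Labels cannot contain spaces, so multi-word categories are joined with "_".
    tags = " ".join("__label__" + label.strip().replace(" ", "_") for label in labels)
    # fastText reads one example per line, so collapse newlines and extra spaces.
    body = " ".join(text.split())
    return f"{tags} {body}"


def split_train_test(records, train_size=100, test_fraction=0.10, seed=0):
    """Pick train_size records for training plus an additional 10% for testing."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    test_size = int(train_size * test_fraction)
    train = shuffled[:train_size]
    test = shuffled[train_size:train_size + test_size]
    return train, test


if __name__ == "__main__":
    print(to_fasttext_line(["Computer science", "Music"], "Hello\nworld  again"))
```

Keeping the test records disjoint from the training records is the important part; how the split is drawn per category is still an open question.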

Models train better on clean data. For example, capitalization and punctuation can degrade fastText results. We will preprocess the data in an Article Cleaner process.
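A first pass at the Article Cleaner could be as small as the sketch below, which lowercases the text, replaces ASCII punctuation with spaces, and collapses whitespace. The function name is hypothetical, and this is only a starting point: it leaves non-ASCII punctuation such as curly quotes untouched. It should be applied to the article text only, before any `__label__` prefixes are attached, since stripping punctuation would mangle the prefix.

```python
import re
import string

# Matches any single ASCII punctuation character.
_PUNCTUATION = re.compile("[" + re.escape(string.punctuation) + "]")


def clean_text(text):
    """Lowercase, replace ASCII punctuation with spaces, and collapse whitespace."""
    lowered = text.lower()
    without_punctuation = _PUNCTUATION.sub(" ", lowered)
    return " ".join(without_punctuation.split())


if __name__ == "__main__":
    print(clean_text("Hello, World! It's  2024."))
```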

The clean data will be passed into fastText to train the classifier model. The test file will be used to validate the trained model. Training parameters will be tweaked here to improve accuracy while maintaining reasonable performance.

RSS Feeds

Eventually we will seed our RSS Web Crawler process with the Alexa Top 1 Million Domains. Initially, however, we will probably test by crawling a blog hosting site like Blogger. We should filter the RSS feeds we find by downloading them and checking the last posted date and content length. This will favor blogs that post full article content over blogs that post only summaries. This is necessary so that we have enough content to make a category (label) prediction about the feed.
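The filter described above might look something like this sketch, which parses an already downloaded RSS 2.0 document with the standard library. The function name and both thresholds (`max_age_days`, `min_avg_chars`) are placeholders picked for illustration, not tuned values, and Atom feeds are not handled.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timedelta, timezone
from email.utils import parsedate_to_datetime

# Namespace of the RSS content module, which many blogs use for full article text.
CONTENT_ENCODED = "{http://purl.org/rss/1.0/modules/content/}encoded"


def keep_feed(rss_xml, now, max_age_days=90, min_avg_chars=1000):
    """Return True if the feed posted recently and its items carry enough text."""
    root = ET.fromstring(rss_xml)
    dates, lengths = [], []
    for item in root.findall("./channel/item"):
        published = item.findtext("pubDate")
        if published:
            try:
                parsed = parsedate_to_datetime(published)
            except (TypeError, ValueError):
                parsed = None  # unparseable date, ignore it
            if parsed is not None:
                if parsed.tzinfo is None:
                    # Treat dates without a usable offset as UTC.
                    parsed = parsed.replace(tzinfo=timezone.utc)
                dates.append(parsed)
        body = item.findtext(CONTENT_ENCODED) or item.findtext("description") or ""
        lengths.append(len(body))
    if not dates or not lengths:
        return False
    is_fresh = now - max(dates) <= timedelta(days=max_age_days)
    is_full = sum(lengths) / len(lengths) >= min_avg_chars
    return is_fresh and is_full


if __name__ == "__main__":
    sample = (
        "<rss version='2.0'><channel><title>Example</title>"
        "<item><pubDate>Mon, 01 Jan 2024 12:00:00 +0000</pubDate>"
        "<description>" + "word " * 300 + "</description></item>"
        "</channel></rss>"
    )
    print(keep_feed(sample, now=datetime(2024, 2, 1, tzinfo=timezone.utc)))
```

Passing `now` in as a parameter keeps the check easy to test against saved feeds.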

Feed Database

Our Labeled Feed Database will be generated by the RSS Prediction Processor. This process will call out to fastText to get a prediction of which categories match the RSS feed. It will also extract RSS feed metadata. This information will be combined to generate an output database of labeled (categorized) feed information.
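Here is a hedged sketch of how the RSS Prediction Processor could combine feed metadata with predicted labels. To keep it runnable without a trained model, the classifier is passed in as a callable that returns a `(labels, probabilities)` pair, which is the shape I understand the fastText Python bindings' `predict` to return. The function name, the `k` and `threshold` values, and the output fields are assumptions.

```python
import xml.etree.ElementTree as ET


def label_feed(rss_xml, predict, k=3, threshold=0.5):
    """Combine feed metadata with predicted categories into one record."""
    channel = ET.fromstring(rss_xml).find("channel")
    if channel is None:
        raise ValueError("not an RSS 2.0 document: no <channel> element")
    # Join all item text into one line; the classifier expects a single line.
    text = " ".join(item.findtext("description") or "" for item in channel.findall("item"))
    text = " ".join(text.split())
    labels, probabilities = predict(text, k)
    # Keep confident labels only and strip the "__label__" prefix.
    categories = [
        label.replace("__label__", "", 1)
        for label, probability in zip(labels, probabilities)
        if probability >= threshold
    ]
    return {
        "title": channel.findtext("title") or "",
        "link": channel.findtext("link") or "",
        "categories": categories,
    }
```

With a real model, `predict` could be something like `lambda text, k: model.predict(text, k=k)`. In practice the feed text would also need to go through the same cleaning step as the training data.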

The database should let users browse feeds by category (label). It should also support full-text search on feed title, category, or both.
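One possible shape for this, sketched with SQLite's FTS5 extension, is shown below. It assumes the SQLite library that Python links against was built with FTS5 (most are, but not all). The table layout and function names are made up for illustration.

```python
import sqlite3


def build_feed_db(feeds):
    """Load labeled feeds into an in-memory FTS5 table (hypothetical layout)."""
    conn = sqlite3.connect(":memory:")
    # url is stored but not indexed for searching.
    conn.execute("CREATE VIRTUAL TABLE feed USING fts5(title, categories, url UNINDEXED)")
    conn.executemany(
        "INSERT INTO feed (title, categories, url) VALUES (?, ?, ?)",
        [(f["title"], " ".join(f["categories"]), f["url"]) for f in feeds],
    )
    return conn


def search(conn, query):
    """Full-text search across feed title and categories, best match first."""
    rows = conn.execute(
        "SELECT title, url FROM feed WHERE feed MATCH ? ORDER BY rank", (query,)
    )
    return rows.fetchall()


def browse(conn, category):
    """List feeds whose categories column matches the given category."""
    # Quote the category as an FTS5 phrase and restrict it to one column.
    phrase = '"' + category.replace('"', '""') + '"'
    rows = conn.execute(
        "SELECT title, url FROM feed WHERE feed MATCH ? ORDER BY title",
        ("categories : " + phrase,),
    )
    return rows.fetchall()


if __name__ == "__main__":
    db = build_feed_db([
        {"title": "Jazz Daily", "categories": ["Music"], "url": "https://example.com/jazz"},
        {"title": "Rust Notes", "categories": ["Programming"], "url": "https://example.com/rust"},
    ])
    print(search(db, "jazz"))
    print(browse(db, "Programming"))
```

A single-file SQLite database also has the advantage of being easy to ship inside a client application.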

Search Results

The Labeled Feed Database should be embeddable in a client application. An RSS Reader is a prime example of where this could be used. A user interface should allow users to search the database, find feeds, and subscribe to them.

Summary

This high-level overview should give you an idea of what we will build and how much work is involved. There is room for improvement. For example, the Labeled Feed Database is just a text search and browsing database. There might be something we could do with machine learning to better match search criteria with the labeled feeds.

Now on to implementing, iterating, and learning.