Feed Spider - Update 2

Wow, the Wikipedia category data I loaded was bad. Really bad. I guess that should be expected considering that was the first run of the Category Extractor. Still, I expected better.

I’ve decided to use the same category classifications that Wikipedia does for determining the main topics. The “Main topic classifications” page lists the top-level categories. From there on down, it is subcategory after subcategory.

For example, if you drill down into “Academic disciplines”, you get a listing of its subcategories.

After loading the database, I should have been able to query it and see all the subcategories under “Main topic classifications”. About half were missing. When dealing with 16GB of compressed text, where do you even start? I could see that I was missing the “Mathematics” subcategory, but had no idea where in that 16GB pile to look.

Eventually, I wrote a program to extract just the “Mathematics” category page, which contained the relationships I was looking for. Then I was able to test with a single page instead of millions and find some bugs. As is typical for me, my logic was sound, but I had made a mistake in the details. I’d gotten in a hurry and made a cut-and-paste error when assigning a field to the database, so I was inserting the wrong one. A little desk checking might have saved me half a day or so of debugging.
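A minimal sketch of that kind of single-page extraction might look like the following. It assumes the data is a bz2-compressed MediaWiki XML export with `<page>`, `<title>`, and `<text>` elements; the function names, the file name in the comment, and the sample data are made up for illustration and are not the actual program.

```python
import bz2
import io
import xml.etree.ElementTree as ET


def local_name(tag):
    """Strip the XML namespace, e.g. '{ns}page' -> 'page'."""
    return tag.rsplit("}", 1)[-1]


def find_page(stream, wanted_title):
    """Stream through the XML and return (title, wikitext) for one page, or None."""
    for _event, elem in ET.iterparse(stream, events=("end",)):
        if local_name(elem.tag) != "page":
            continue
        title = text = None
        for child in elem.iter():
            name = local_name(child.tag)
            if name == "title":
                title = child.text
            elif name == "text":
                text = child.text or ""
        if title == wanted_title:
            return title, text
        elem.clear()  # drop the contents of pages we are skipping
    return None


def find_page_in_dump(path, wanted_title):
    """Same search, reading directly from a .bz2 dump without unpacking it."""
    # e.g. find_page_in_dump("dump.xml.bz2", "Category:Mathematics")  (hypothetical file)
    with bz2.open(path, "rb") as stream:
        return find_page(stream, wanted_title)


# Tiny made-up sample standing in for the real dump.
SAMPLE = b"""<mediawiki xmlns="http://www.mediawiki.org/xml/export-0.10/">
  <page><title>Category:Physics</title>
    <revision><text>[[Category:Physical sciences]]</text></revision></page>
  <page><title>Category:Mathematics</title>
    <revision><text>[[Category:Main topic classifications]]</text></revision></page>
</mediawiki>"""

result = find_page(io.BytesIO(SAMPLE), "Category:Mathematics")
```

Once the one page is saved off, the loader can be run against it over and over in seconds instead of hours.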

I fixed my bug and started up the process again. Since I’d added another constraint on the database to improve the quality of the category relationships, the process was running slower than it had before. It was now taking about 2 or 3 hours to run. I never stick around to time it, so I fired it off and went to bed.

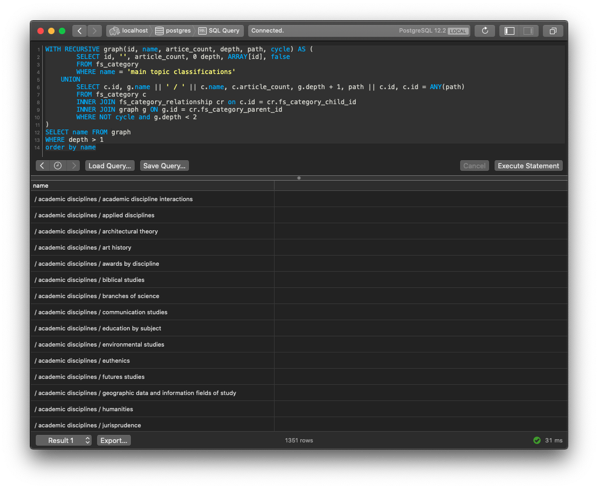

This morning I got up early and checked the data. It looks good now!

I’m able to recreate the category relationships in the Wikipedia pages. Nothing is missing! The query in the screenshot above grabs all the subcategories of “Main topic classifications” along with their subcategories. This yields 1351 topics. I think that is a reasonable number of labels to train the model with. At least it is a good starting point to see how things shake out.
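For readers who can't make out the screenshot, a two-level query of this kind can be sketched roughly as below. The `category_link` table, its columns, the `UNIQUE` constraint, and the handful of sample rows are all assumptions for illustration; the real schema and the real constraint may differ.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Hypothetical schema: one row per parent -> child category relationship.
conn.execute(
    """
    CREATE TABLE category_link (
        parent TEXT NOT NULL,
        child  TEXT NOT NULL,
        UNIQUE (parent, child)  -- assumed constraint: no duplicate relationships
    )
    """
)

# Made-up sample rows, not real extracted data.
conn.executemany(
    "INSERT INTO category_link (parent, child) VALUES (?, ?)",
    [
        ("Main topic classifications", "Academic disciplines"),
        ("Main topic classifications", "Mathematics"),
        ("Academic disciplines", "Biblical studies"),
        ("Mathematics", "Algebra"),
        ("Biblical studies", "Novelistic portrayals of Jesus"),  # third level
    ],
)

# Level 1: direct subcategories of the root.
# Level 2: subcategories of those subcategories.
TWO_LEVELS = """
SELECT child AS topic
FROM category_link
WHERE parent = :root
UNION
SELECT l2.child
FROM category_link AS l1
JOIN category_link AS l2 ON l2.parent = l1.child
WHERE l1.parent = :root
ORDER BY topic
"""

topics = [
    row[0]
    for row in conn.execute(TWO_LEVELS, {"root": "Main topic classifications"})
]
```

`UNION` rather than `UNION ALL` matters here, since a category can sit under more than one parent and would otherwise be counted twice.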

I’m envisioning a way for users to select “Academic disciplines”, choose “Biblical studies” from the resulting list, and then see a listing of blogs that fall into that category. They should also be able to search for “biblical” and get the same thing. Possibly we could even get to the point where searching for “bible” turns up the correct blogs using word vectors.

Now that I can go down from “Main topic classifications” to the categories I want identified, I have to go the other way. If a page has the category “Novelistic portrayals of Jesus”, I need to be able to roll that up to “Biblical studies”. Once that gets figured out, I can begin extracting articles for training the model.
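One possible way to do the roll-up, sketched here as an idea rather than a finished design, is a breadth-first walk up the parent links until a label category is reached. The `parents` mapping, the `roll_up` name, and the intermediate category in the sample are all made up for illustration. Wikipedia categories can have several parents and can loop back on themselves, so the sketch tracks what it has already visited.

```python
from collections import deque


def roll_up(category, parents, labels):
    """Walk upward from a category and return the label categories reached.

    Climbing stops on each path as soon as a label is hit, so only the
    first label along each upward path is reported.
    """
    found = set()
    seen = {category}
    queue = deque([category])
    while queue:
        current = queue.popleft()
        if current in labels:
            found.add(current)
            continue  # do not climb past a label
        for parent in parents.get(current, ()):
            if parent not in seen:  # guards against cycles and repeat visits
                seen.add(parent)
                queue.append(parent)
    return found


# Made-up child -> parents mapping; the middle category is hypothetical.
PARENTS = {
    "Novelistic portrayals of Jesus": ["Intermediate category (made up)"],
    "Intermediate category (made up)": [
        "Biblical studies",
        "Novelistic portrayals of Jesus",  # deliberate cycle
    ],
    "Biblical studies": ["Academic disciplines"],
    "Academic disciplines": ["Main topic classifications"],
}

# The label set would be the topics chosen for training.
LABELS = {"Biblical studies", "Mathematics"}

rolled = roll_up("Novelistic portrayals of Jesus", PARENTS, LABELS)
```

Returning a set instead of a single value leaves room for a page that legitimately rolls up to more than one topic.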