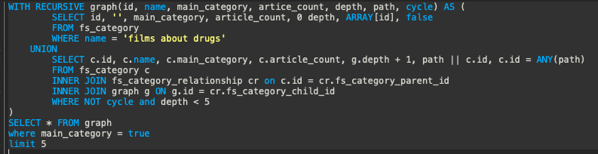

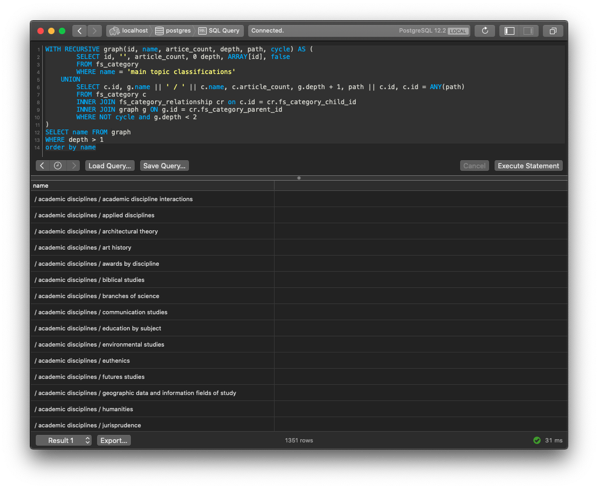

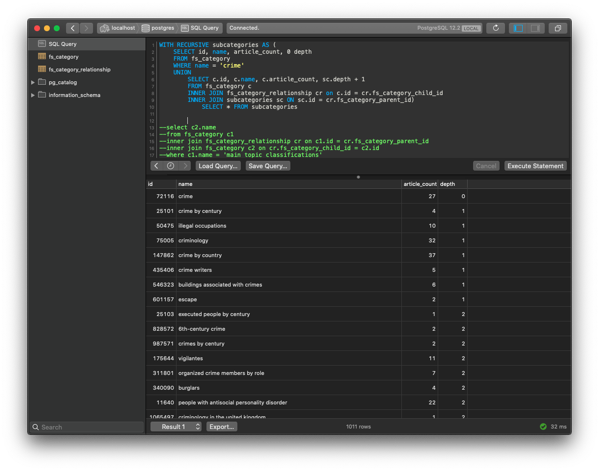

Yesterday, I got the categories and category relationships loaded into the relational database and identified the categories I want to use for blogs. The next step was rolling up the hundreds of thousands of Wikipedia categories into those roughly 1300 categories.

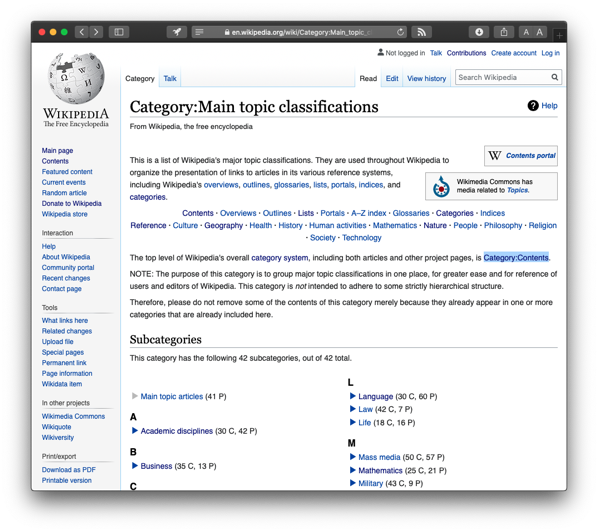

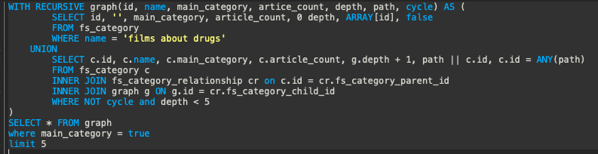

I came up with a query that I thought would work. This isn’t easy because Wikipedia’s categories aren’t strictly hierarchical. They kind of are, but the structure is really more of a graph than a hierarchy. What I mean by this is that a specific article can have multiple top-level categories, and there are many paths to get to them. You can see this if you click on one of the categories at the bottom of a Wikipedia article. It will take you to a page about that category, and at the bottom of that page are more categories that this one belongs to. Since there is more than one, there is no single obvious path to the top; there are many.

One pitfall of walking a graph like this is getting stuck in a loop. For example, category A points to category B, which points to category C, which points back to category A. Another problem is ending up with too many top-level categories. Finally, you have to deal with the sheer number of paths that can be taken. Say the category we are looking at has 5 parent categories, each of those has 5, and each of those has 5. That’s 125 paths to search after going up only 3 levels.

In the end I put in code to limit recursion, capped the result at 5 categories, and searched only 4 levels upward. Even with those limits, the query takes about 700ms to run, which is very slow. That is not good.
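To make those three limits concrete, here is a minimal Python sketch of a bounded upward walk. It is not the SQL query from this post; it is an in-memory stand-in for the same idea. The names `roll_up`, `parents`, and `targets` are hypothetical, and `parents` is assumed to map each category to a list of its parent categories.

```python
from collections import deque


def roll_up(category, parents, targets, max_depth=4, max_results=5):
    """Walk upward breadth-first and collect the nearest target categories.

    parents: dict mapping a category to its parent categories (assumed shape).
    targets: set of roll-up categories we are willing to return.
    """
    found = []
    seen = {category}  # guards against loops such as A -> B -> C -> A
    queue = deque([(category, 0)])

    while queue and len(found) < max_results:
        current, depth = queue.popleft()

        if current in targets:
            found.append(current)
            continue  # stop climbing once a roll-up category is reached

        if depth == max_depth:
            continue  # do not search above the depth limit

        for parent in parents.get(current, ()):
            if parent not in seen:
                seen.add(parent)
                queue.append((parent, depth + 1))

    return found
```

The `seen` set is what breaks loops, and it also keeps a category that is reachable by several paths from being expanded more than once. Searching breadth-first means the closest roll-up categories are found first, so the cap of 5 results keeps the nearest ones. Whether the walk should stop at the first roll-up category on a path or keep climbing is a design choice; this sketch stops.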

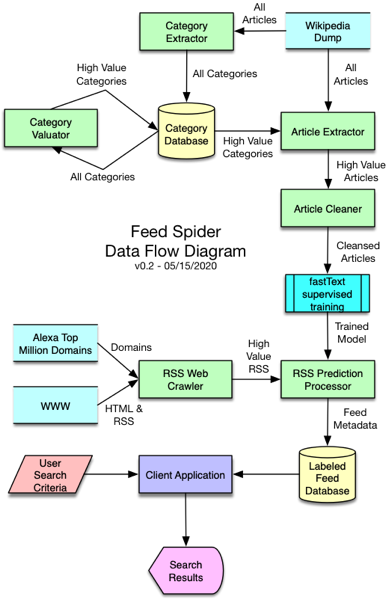

When building a complex system, it is important to address architectural risk as early as possible. I’m sure you have heard stories of projects getting cancelled after months or even years of development. Lots of times this is because a critical piece of the architecture proved unviable late in the project. Tragically, a lot of work that relied on that piece has usually been done before anyone discovers that it won’t work.

The biggest piece of architectural risk in this system is the machine learning part. We want to get to that as quickly as possible so that we don’t end up doing work that may be thrown away. So I decided to move on to building the Article Extractor instead of optimizing the query or even trying to make it more accurate. We can come back to it later, after the risk has been addressed.

Because the Article Extractor is very similar to the Category Extractor, it didn’t take long to code and test. Its job is to read articles from the Wikipedia dumps, assign the roll-up categories to each one, and write the result out to a file. Since it relies on the slow query for part of its logic, I knew it would be slow. So I fired up 9 instances of the Article Extractor and let them run for about 14 hours.

When I finally checked the output of the Article Extractor, it had produced only 68,000 records. That isn’t very many, but it should be enough for us to move on to the next step. We can go back and generate more data later if this doesn’t prove sufficient.

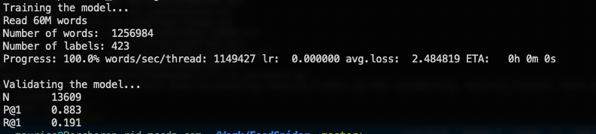

The next step is to prepare fastText to do some blog classifications by training the model. I don’t know much of anything about fastText yet. I’ve bought a book on it, but haven’t read it. To keep things moving along, I adapted their tutorial to work with the data produced thus far.

I wrote the Article Cleaner (see flowchart) as a shell script. It combines the multiple output files from the Article Extractor processes, runs them through some data normalization routines, and splits the result 80/20 into two separate files. The bigger file is used to train the model and the smaller one to validate it.
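For readers who would rather see the idea than a shell pipeline, here is a rough Python sketch of the same two steps. It is not the actual Article Cleaner script. The function names are hypothetical, and the normalization shown (lowercasing and padding punctuation with spaces) is an assumption loosely modeled on the preprocessing in the fastText tutorial, not necessarily what my script does.

```python
import random
import re


def normalize(line):
    """Lowercase the text and put spaces around common punctuation."""
    line = re.sub(r"([.!?,'/()])", r" \1 ", line)
    return " ".join(line.lower().split())


def split_80_20(lines, train_fraction=0.8, seed=0):
    """Shuffle the lines, then cut them into a training and a validation set."""
    shuffled = list(lines)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split repeatable
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]
```

Shuffling before cutting matters when the input is several files concatenated together. Without it, the validation set would come entirely from the tail of the last file instead of being a sample of everything.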

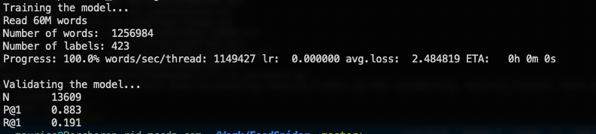

Supervised training came next. I fed fastText some parameters that I don’t fully understand but that come from the tutorial, and validated the model. I was quite shocked when the whole thing ran in under 3 minutes. The fastText developers weren’t kidding when they named the project.

The numbers are hard to read, but what they are saying is that we are 88% accurate at predicting the correct categories for the Wikipedia articles fed to the model for validation. In the tutorial, they only get their model up to 60% accurate, so I’m calling this good for now. Most likely, our larger input dataset is what made us more accurate than the tutorial. Eventually, I’ll do some reading and hopefully get that number even higher.
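For anyone wondering what a figure like that measures, the fastText tutorial reports precision and recall at 1 when it tests a model. Assuming that is the number I am reading here, the calculation can be sketched in a few lines of Python. The function name and the input shapes are hypothetical, and this is an illustration of the metric, not fastText’s own code.

```python
def precision_recall_at_k(predictions, truths, k=1):
    """Compute precision@k and recall@k over a validation set.

    predictions: one ranked list of predicted labels per article.
    truths: one collection of correct labels per article.
    """
    hits = 0       # predicted labels that were correct
    predicted = 0  # labels the model predicted in total
    actual = 0     # correct labels that exist in total

    for ranked, truth in zip(predictions, truths):
        top = ranked[:k]
        hits += len(set(top) & set(truth))
        predicted += len(top)
        actual += len(truth)

    return hits / predicted, hits / actual
```

Precision at 1 answers the question "how often is the single best guess one of the right categories?" Recall is lower whenever articles carry more than one correct category, because a single guess can never cover them all.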

Now, it is almost time for the rubber to hit the road. The next step is to begin feeding blogs to the prediction engine and see what comes out. At this point, I’m not too concerned about the machine learning part working. I’m mostly concerned that the categories we’ve selected won’t work well for blogs. I guess I’m about to find out.